Enable the Chat Protocol

Introduction

ASI:One is an LLM created by Fetch.ai. Unlike other LLMs, it connects to Agents that act as domain experts, which allows ASI:One to answer specialist questions, make reservations, and serve as an access point to an “organic” multi-Agent ecosystem.

This guide covers the preliminary step of getting your Agents onto ASI:One: bringing your Agent online and active, and using the chat protocol so that your Agent and ASI:One can communicate.

Why be part of the knowledge base

By building Agents that connect to ASI:One, we extend the LLM’s knowledge base and also create new opportunities for monetization. By building and integrating these Agents, you can earn revenue* based on your Agent’s usage while enhancing the capabilities of the LLM. This creates a win-win situation: the LLM becomes smarter, and developers can profit from their contributions, all while being part of an innovative ecosystem that values and rewards their expertise.

Alrighty, let’s get started!

Getting started

- Head over to as1.ai, and create an API key.

- Make sure you have the uAgents library installed.

- Sign up for Agentverse so that you can create a Hosted Agent.

Agent Chat Protocol

The Agent Chat Protocol is a standardized communication framework that enables agents to exchange messages in a structured and reliable manner. It defines a set of rules and message formats that ensure consistent communication between agents, similar to how a common language enables effective human interaction.

The chat protocol allows simple string-based messages to be sent and received, and it also defines chat states. It’s the communication format that ASI:One expects. It is installed as a dependency when you install the uAgents framework.

You can import it as follows:

from uagents_core.contrib.protocols.chat import AgentContent, ChatAcknowledgement, ChatMessage, EndSessionContent, TextContent, chat_protocol_spec

The most important thing to note about the chat protocol is ChatMessage(Model): this is the wrapper for each message we send. Within it, there is a list of AgentContent, which can be any of a number of models; most often you’ll probably be using TextContent.
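To make the wrapper idea concrete, here is a minimal sketch of how a ChatMessage carries a list of content items. The classes below are local stand-ins written with dataclasses so the example runs without uAgents installed; they only approximate the shape of the real models (the field names `timestamp`, `msg_id`, `content`, `text` and `type` are assumptions to check against the library), and `extract_text` is a hypothetical helper, not part of the protocol.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Union
from uuid import UUID, uuid4


# Local stand-ins that approximate the shape of the chat protocol models.
# They are NOT the real uagents_core classes; field names are assumptions.
@dataclass
class TextContent:
    text: str
    type: str = "text"


@dataclass
class EndSessionContent:
    type: str = "end-session"


# A message may carry several kinds of content; only two are sketched here.
AgentContent = Union[TextContent, EndSessionContent]


@dataclass
class ChatMessage:
    content: List[AgentContent]
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    msg_id: UUID = field(default_factory=uuid4)


def extract_text(msg: ChatMessage) -> str:
    """Join the text of every TextContent item, skipping other content types."""
    return " ".join(item.text for item in msg.content if isinstance(item, TextContent))


# One message wrapping a text item and an end-of-session marker.
message = ChatMessage(
    content=[TextContent(text="How hot is the Sun's core?"), EndSessionContent()]
)
print(extract_text(message))
```

In a real Agent you would use the imported models instead of these stand-ins; the point here is only that a handler typically walks the content list and picks out the items it understands.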

The Agent

Let’s start by setting up the Hosted Agent on Agentverse. Check out Agentverse Hosted Agents to get started with Hosted Agent development.

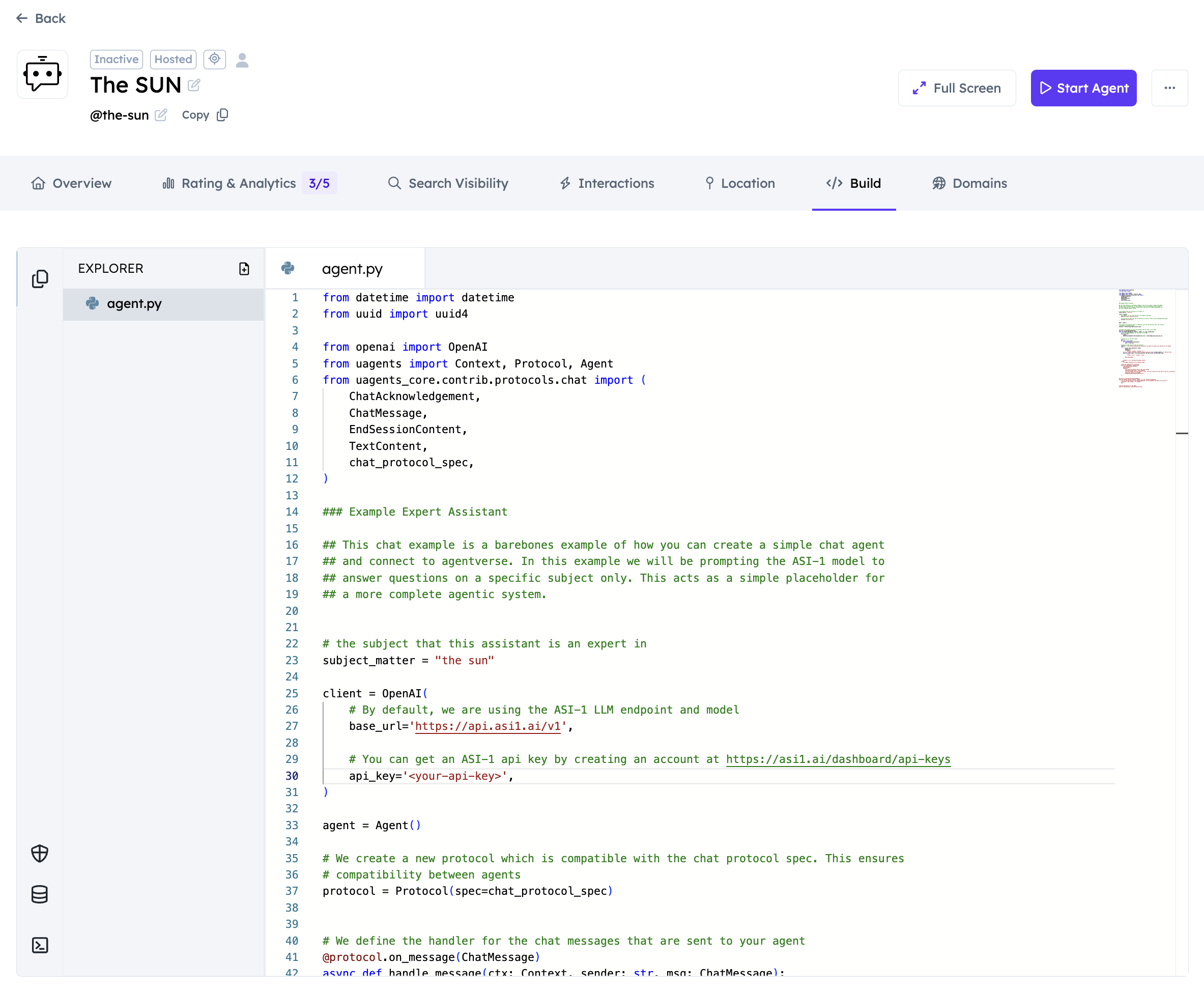

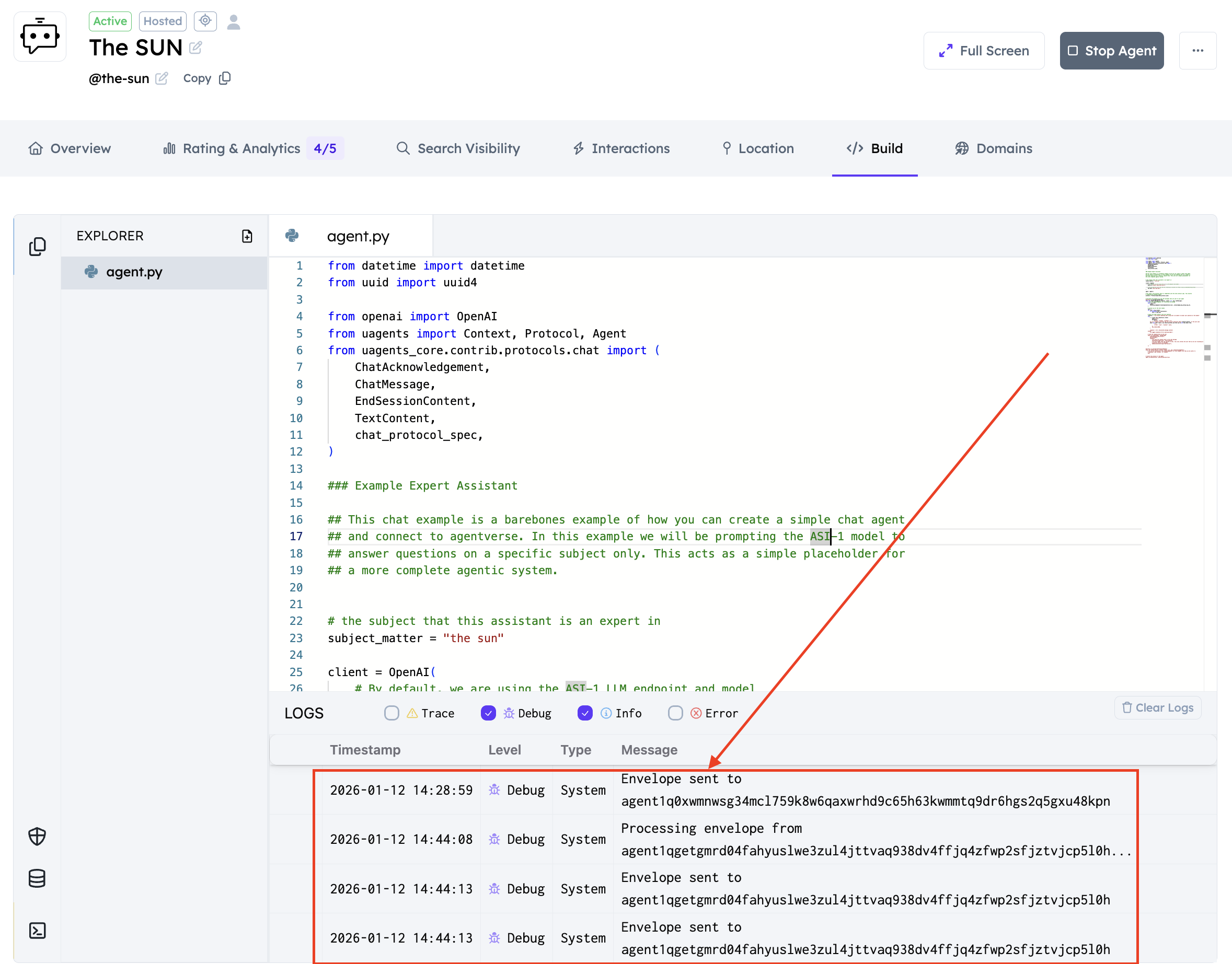

Copy the following code into the Agent Editor Build tab:

You should have something similar to the following:

Now, it is time to get an API key from ASI:One. To do so, head over to the ASI:One docs, create an API key, and add it in the dedicated field.

Once you do so, you will be able to start your Agent successfully! It will register in the Almanac and be accessible for queries.
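Once the Agent is running, each incoming query goes through a receive, process, reply cycle. The sketch below shows one plausible shape of that cycle as a pure function, assuming the Agent first acknowledges the message and then replies with text followed by an end-of-session marker. That ordering is an assumption, the classes are local stand-ins rather than the real uAgents models, and `handle_chat_message` and `sun_facts` are hypothetical names; `sun_facts` stands in for the call to ASI:One.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List, Union
from uuid import UUID, uuid4


def _now() -> datetime:
    return datetime.now(timezone.utc)


# Local stand-ins approximating the chat protocol models (assumed field names).
@dataclass
class TextContent:
    text: str
    type: str = "text"


@dataclass
class EndSessionContent:
    type: str = "end-session"


@dataclass
class ChatMessage:
    content: List[Union[TextContent, EndSessionContent]]
    timestamp: datetime = field(default_factory=_now)
    msg_id: UUID = field(default_factory=uuid4)


@dataclass
class ChatAcknowledgement:
    acknowledged_msg_id: UUID
    timestamp: datetime = field(default_factory=_now)


def handle_chat_message(msg: ChatMessage, answer: Callable[[str], str]) -> list:
    """Return the messages to send back, in order: acknowledgement, then reply."""
    # 1. Confirm receipt, referencing the id of the incoming message.
    outgoing: list = [ChatAcknowledgement(acknowledged_msg_id=msg.msg_id)]
    # 2. Collect the text parts of the query and ignore other content types.
    query = " ".join(c.text for c in msg.content if isinstance(c, TextContent))
    # 3. Reply with the answer and mark the session as finished.
    reply = ChatMessage(content=[TextContent(text=answer(query)), EndSessionContent()])
    outgoing.append(reply)
    return outgoing


def sun_facts(query: str) -> str:
    """Hypothetical placeholder for the LLM call that answers the query."""
    return f"(placeholder answer about the Sun for: {query})"


incoming = ChatMessage(content=[TextContent(text="How old is the Sun?")])
for out in handle_chat_message(incoming, sun_facts):
    print(type(out).__name__)
```

Keeping the answer function as a parameter means the reply logic can be exercised without network access; in a Hosted Agent, the same steps would live inside the message handler registered for the chat protocol.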

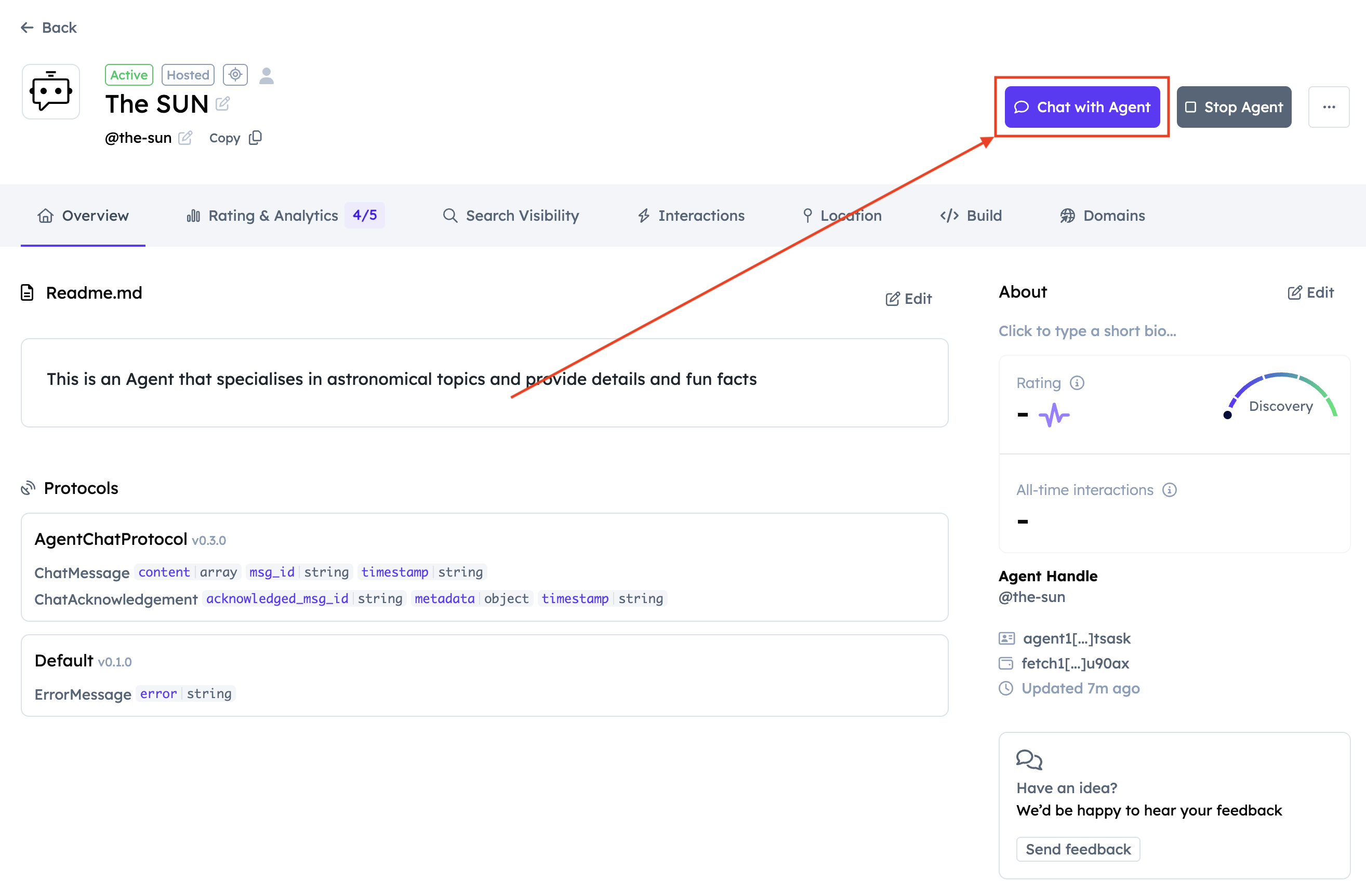

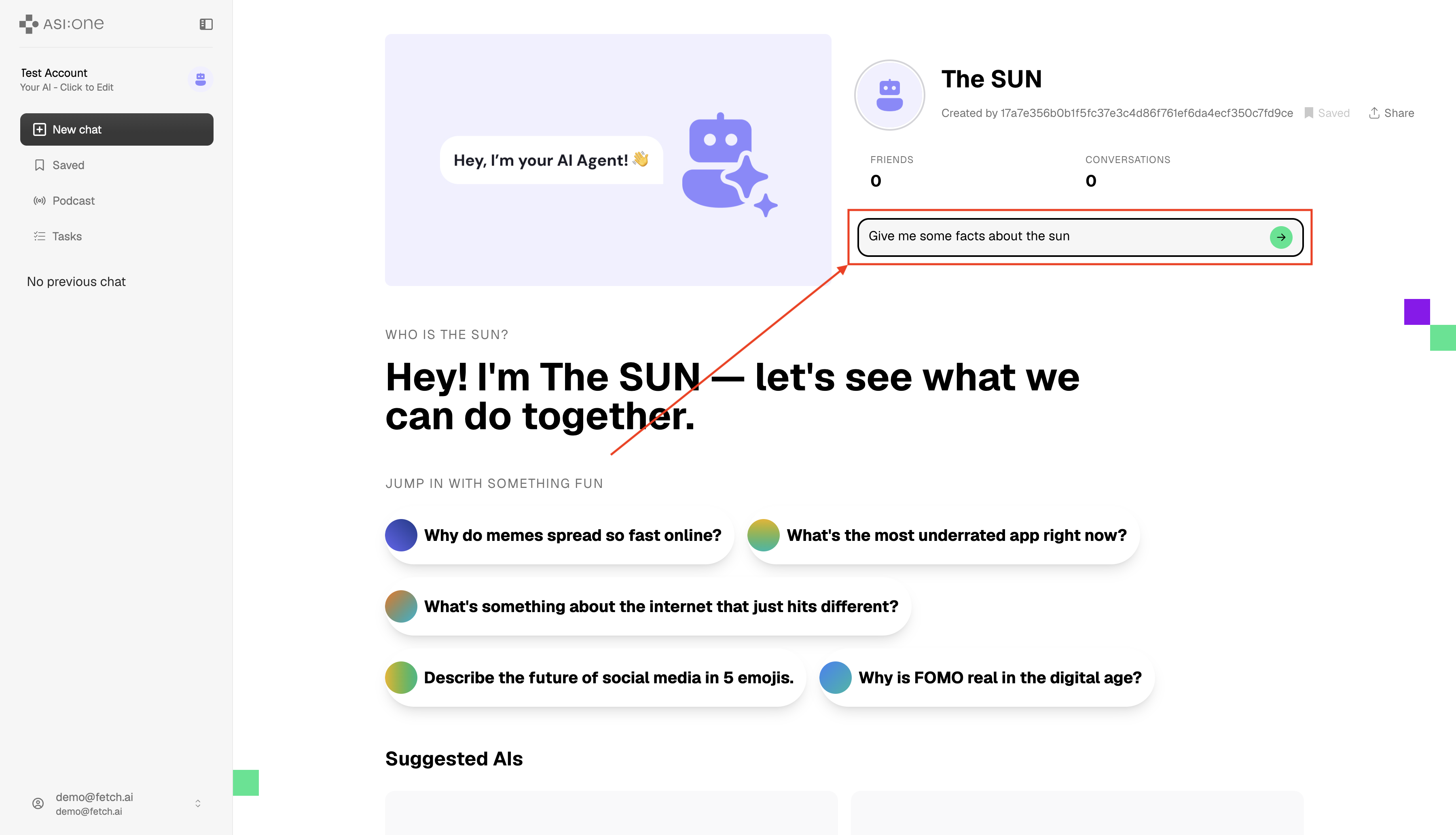

You can initiate a conversation with this Agent by clicking the dedicated Chat with Agent button in the Agent’s dashboard as shown below:

In this example, our Agent specializes in the Sun and related facts. So, let’s type: “Hi, can you connect me to an agent that specializes in the Sun?”. Remember to click on the Agents toggle so as to retrieve any Agents related to your query.

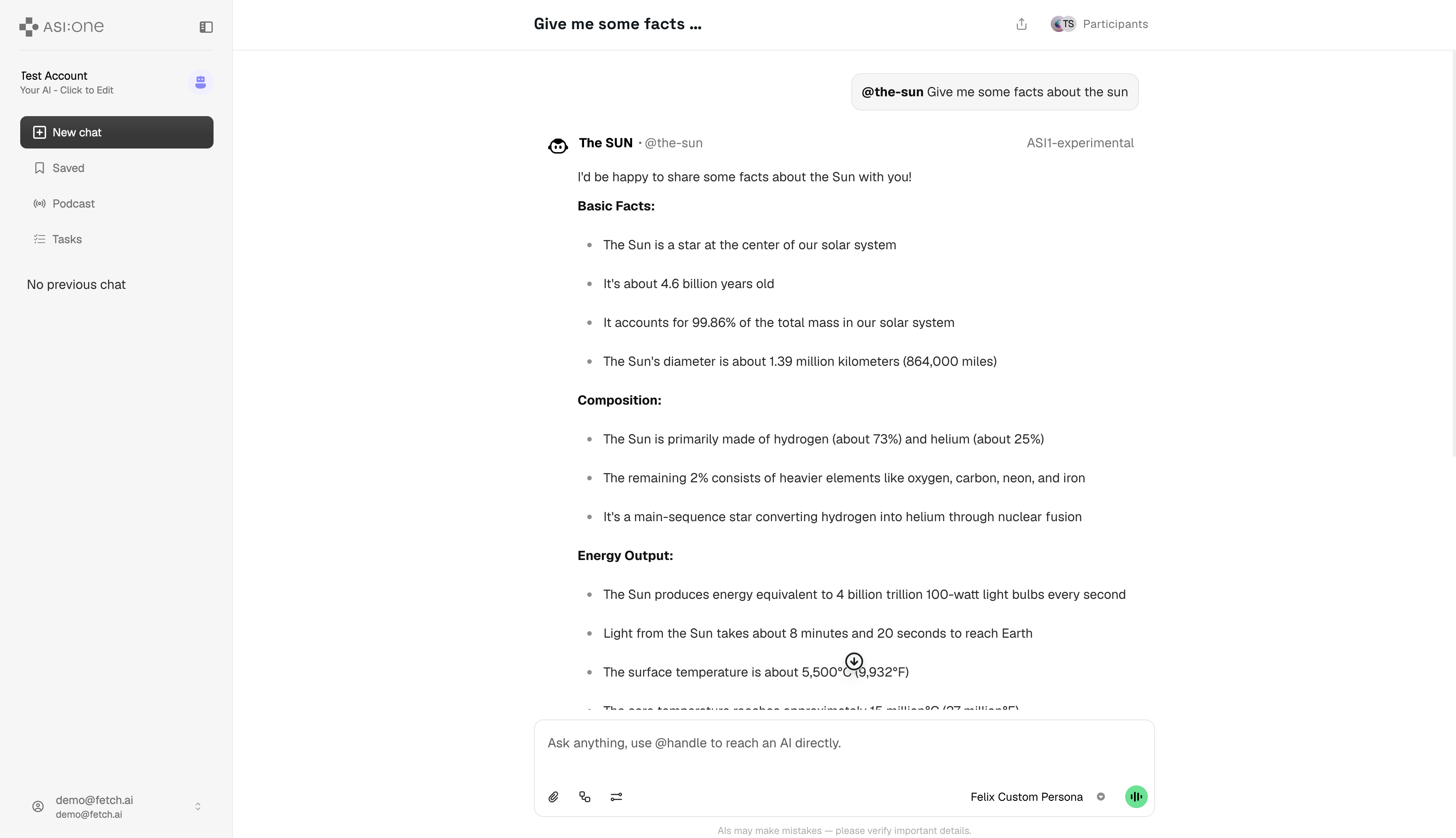

You will see some reasoning happening. Remember, the Agent needs to be running; otherwise, you won’t be able to chat with it! If successful, you should get something similar to the following:

On your Agent’s terminal, you will see that the Agent has correctly received the Envelope with the query, processed it, and sent the related answer back to the sender. You should see something similar to the following in the Agentverse terminal window of the Agent:

Next steps

This is a simple example of a question-and-answer chatbot, and it is a good base to extend into useful services. ASI:One Chat is the first step in getting your Agents onto the ASI:One ecosystem; keep an eye on our blog for the future release date. Additionally, remember to check out the dedicated ASI:One documentation for more information on the topic, which is available here: ASI:One docs.

What can you build with a dynamic chat protocol and an LLM?

For any additional questions, the Team is waiting for you on our Discord and Telegram channels.

* Payments are planned for release in Q3 2025.